
Google Assistant for iOS

Yesterday, at the 2017 Google I/O developer conference, it was announced that last year's big hit, the Google Assistant, is now available for iOS. Even though I am heavily invested in the Apple ecosystem, I am just as invested in that of its rival, so this news really excited me.

Like all good things, those of us in the UK don’t get it straight away, but as many of us know, this is no barrier to entry if you have a US iTunes account. So with that, I went ahead and added it to my devices.

So why be excited about the Google Assistant on my iPhone? I’ve had a virtual assistant for years in the form of Siri, but she has let me down year on year. In terms of mobile AI, it is easy to say she is the worst on the market, which is such a misstep for Apple. The more time I spend with my Alexa, the more I know I’m not wrong: it could be so much better.

The Google Assistant on iOS is never going to be the powerhouse it is on the Google Pixel and compatible phones, because it just doesn’t have the hooks into the OS. Even without them, it still blows Siri out of the water.

No matter what phone you have, the advantage of the native AI assistant is that it is easily accessible and integrates really well. On my iPhone and my Apple Watch, it is a quick press of the home button and you’re ready to go. But Google have thought about this and added a widget that you can access by simply swiping left on your screen. Hit the Assistant icon and it is immediately listening.

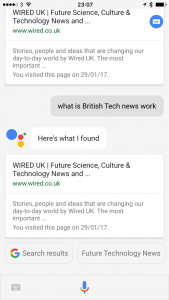

In terms of what it can do on the most basic level, it has no advantage over its rivals Siri, Alexa or Cortana. Asking what the weather is, setting a timer or asking basic questions is the same across platforms, but it is the contextual nature of the Google Assistant that makes it a viable alternative. It can easily follow on from a question, so if I ask what the weather is today it will say ‘it’s going to rain’, and if I then ask ‘what about tomorrow’ it knows that I’m still asking whether anything will fall from the sky. I’m not knocking the competition, as they do something very similar, but the text suggestions from Google are really useful. If you ask a question using your voice, it will answer you and then suggest what you might want to ask next, which you can do with the simple press of a button.

The main advantage of this version of the Assistant is that it takes text input as well as voice, so if you’re not comfortable talking to your device you can surreptitiously type what you want to know and carry on the conversation.

Like I say, I have a lot of stuff in Google, and being able to pull in my calendar events and email is a killer feature. It is also always learning. I know that many people don’t want Google analysing their day-to-day activities, but if you’re comfortable with that, this could really make a world of difference if you want some basic AI functionality on your device.

Google I/O did demonstrate some of the upcoming functions of its assistant, such as Google Lens, which showed it learning from what it saw through your camera, along with the awesome demo of connecting to a wifi network just by scanning the sticker on the back of your router. It shows that Google’s mass collection of user data gives it the edge, and that its machine learning is beyond that of its counterparts, but it does come at a cost: ours. But don’t we already put ourselves out there? Is it just time to accept there are some advantages to this data prostitution? That is your decision alone. It is a road Apple has chosen not to go down, hence the slip in Siri’s functionality. What is the best path to take?

There have been a couple of occasions where the Assistant has told me ‘I’m still learning’, but this is to be expected. I will persist, and I will update you in anticipation of the UK launch of the app.

Updates coming soon.

Author

Paul Wright
